Introduction

Creating a product library that can be quickly searched and accessed is integral to maintaining an online catalogue of goods and services. Products can be added, and reports and quotes can be generated, from within the library. The ability to save and retrieve data from the library is therefore crucial. This information can be accessed and modified using the APIs provided by the product.

The Product Detail API allows products to be fetched through an API request. It can fetch a specific product by its ID, search and filter by product ID, and return a list of available products with their IDs.

The Product Detail API allows developers and merchants to access detailed information about merchandise stored in the product library. This includes the price, stock level, and total quantity of a particular product. The retrieved information can then be used to create reports and generate quotes.
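As a rough illustration of turning retrieved product details into a quote, the sketch below parses a JSON response and prices a requested quantity. The response body, the field names (`product_id`, `price`, `stock_level`, `total_quantity`), and the `build_quote` helper are all assumptions made for this example; a real Product Detail API defines its own schema.

```python
import json

# Hypothetical response body; the field names are assumptions for illustration.
SAMPLE_RESPONSE = """
{
  "product_id": "SKU-1001",
  "name": "Canvas Tote Bag",
  "price": 12.50,
  "stock_level": 40,
  "total_quantity": 100
}
"""


def build_quote(response_body: str, quantity: int) -> dict:
    """Parse a product detail response and price the requested quantity."""
    product = json.loads(response_body)
    # Refuse to quote more units than are currently in stock.
    if quantity > product["stock_level"]:
        raise ValueError("requested quantity exceeds current stock level")
    return {
        "product_id": product["product_id"],
        "quantity": quantity,
        "unit_price": product["price"],
        "total": round(product["price"] * quantity, 2),
    }


quote = build_quote(SAMPLE_RESPONSE, quantity=4)
print(quote)
```

The same parsed dictionary could feed a report instead of a quote; only the fields that are read would change.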

What is the Product Detail API?

A product API is an interface often used to manage catalogues of products. The API pulls information from a product page, such as images, product IDs, prices, product specifications, and brands. It also helps make products more visible and lets users send requests straight to the product database.

These APIs are made to manage product details. They ensure that every available product you or your customers have is represented as an entry in a library. It is also possible to fetch specific product IDs from the API and use them in your own products.

How does it Work?

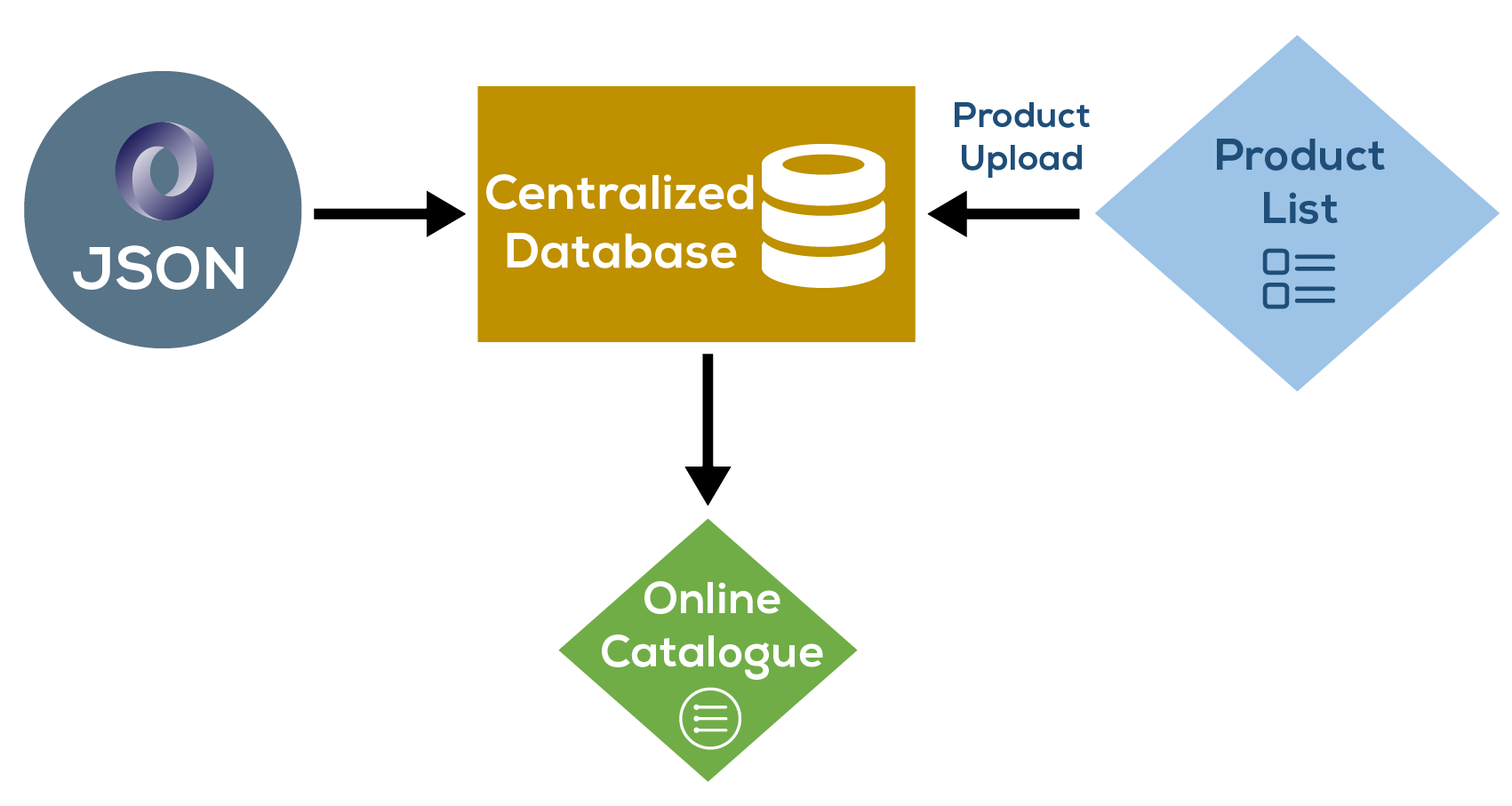

The Product Detail API uses JavaScript Object Notation (JSON) and other mechanisms to organize and maintain its data. A product list can be uploaded to a centralized database, and the full list can then be used to generate an online catalogue. Requests are made to the catalogue server through its endpoints, where the GET method retrieves data from the underlying database. In response to a request, the API first checks the Content-Type header and then returns a JSON object containing the requested information. The PUT method saves changes to product details such as price, whereas the POST method creates a new resource such as a category. Finally, the DELETE method removes unwanted entries such as comments.
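The four HTTP methods described above can be sketched with Python's standard `urllib.request` module. The base URL, the paths, and the `make_request` helper are hypothetical and chosen only for illustration; the requests are built but never sent, so the example runs without a network connection.

```python
import json
from typing import Optional
from urllib.request import Request

# Hypothetical base URL and paths; a real service defines its own endpoints.
BASE_URL = "https://api.example.com"


def make_request(method: str, path: str, payload: Optional[dict] = None) -> Request:
    """Build (but do not send) an HTTP request with an optional JSON body."""
    body = json.dumps(payload).encode("utf-8") if payload is not None else None
    headers = {"Accept": "application/json"}
    if body is not None:
        # Tell the server that the request body is JSON.
        headers["Content-Type"] = "application/json"
    return Request(BASE_URL + path, data=body, headers=headers, method=method)


examples = {
    "fetch_product": make_request("GET", "/products/SKU-1001"),
    "update_price": make_request("PUT", "/products/SKU-1001", {"price": 14.0}),
    "create_category": make_request("POST", "/categories", {"name": "Bags"}),
    "delete_comment": make_request("DELETE", "/products/SKU-1001/comments/7"),
}

for name, req in examples.items():
    print(name, req.get_method(), req.full_url)
```

Passing one of these objects to `urllib.request.urlopen` would send it; that step is left out because the endpoints here do not exist.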

Who Should Use Product Detail API?

Those looking to build apps for use with eCommerce platforms can do so with the help of the Product Detail API. In addition to using APIs to control their product catalogue data, eCommerce platforms also use them to promote their services. This API can also benefit customers who are shopping online or researching a product selection. As more businesses and developers work with large data sets, the demand for product APIs will continue to grow.

Benefits of using the Product Detail API

Using the Product Detail API can help you organize your stock information, which in turn helps you normalize your database. This can be done by creating a new row in an existing or new table so that certain information is stored separately from other product data. Doing so helps you avoid duplication and makes it easier to retrieve information about specific products.

It also makes it easier to include related information in your databases, such as manufacturer details, supplier information, and other relevant notes. Storing this data separately lets it be retrieved more quickly, which is especially helpful when creating a searchable online catalogue of certain products.
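To make the idea of storing supplier information separately from other product data concrete, here is a minimal sketch using Python's built-in `sqlite3` module and an in-memory database. The table names, column names, and sample rows are assumptions for illustration, not a schema prescribed by any particular Product Detail API.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Supplier details live in their own table and are referenced by ID,
# so each supplier is stored once instead of being repeated per product.
conn.executescript("""
CREATE TABLE suppliers (
    supplier_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE products (
    product_id  INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    price       REAL NOT NULL,
    supplier_id INTEGER NOT NULL REFERENCES suppliers(supplier_id)
);
""")

conn.execute("INSERT INTO suppliers VALUES (1, 'Acme Textiles')")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?, ?)",
    [(1, "Canvas Tote Bag", 12.5, 1), (2, "Cotton Scarf", 8.0, 1)],
)

# Join the two tables back together when a full product view is needed.
rows = conn.execute("""
SELECT p.name, p.price, s.name
FROM products AS p
JOIN suppliers AS s ON s.supplier_id = p.supplier_id
ORDER BY p.product_id
""").fetchall()
print(rows)
```

Because the supplier name appears in only one row, renaming a supplier is a single update rather than one per product.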

Not only does it help to organize your product information, but you can also use it to create a full-blown shopping cart for use with eCommerce sites. Storing this product data separately makes creating reports and generating quotes easier. The API can also be used to implement complex filtering and sorting capabilities in your applications.
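The filtering and sorting mentioned above might look like the following on the client side, once product records have been retrieved. The `Product` fields, the sample catalogue, and the `search` helper are hypothetical; many real APIs would instead accept such filters as query parameters.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Product:
    product_id: str
    brand: str
    price: float
    stock_level: int


# Hypothetical catalogue data for illustration only.
CATALOGUE = [
    Product("SKU-1001", "Northwind", 12.5, 40),
    Product("SKU-1002", "Northwind", 30.0, 0),
    Product("SKU-1003", "Contoso", 8.0, 15),
    Product("SKU-1004", "Northwind", 9.75, 3),
]


def search(
    products: List[Product],
    brand: Optional[str] = None,
    max_price: Optional[float] = None,
    in_stock_only: bool = False,
    sort_by: str = "price",
    descending: bool = False,
) -> List[Product]:
    """Return the products matching every supplied filter, sorted by one field."""
    matches = [
        p
        for p in products
        if (brand is None or p.brand == brand)
        and (max_price is None or p.price <= max_price)
        and (not in_stock_only or p.stock_level > 0)
    ]
    return sorted(matches, key=lambda p: getattr(p, sort_by), reverse=descending)


results = search(CATALOGUE, brand="Northwind", in_stock_only=True)
print([p.product_id for p in results])
```

Filters left as `None` are simply skipped, so the same function covers both a broad listing and a narrow search.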

When using the API, it is important to store all information in separate tables so you can easily retrieve specific information about each product. This will help reduce possible errors in the database and make it easier to generate reports and access relevant data about each product in a timely manner.

The Product Detail API is ideal for developers who work with eCommerce platforms, because it can supply the correct information about a product that a merchant wants displayed on their website. This makes it easier for merchants to promote their products and increase their conversion rates.

In addition, you can easily include this API in any custom-built applications you develop for your business or your clients. It allows developers to take advantage of useful data from your product catalogue and use it in their products and applications.

What Can the Product Detail API Do?

When used by programmers, product detail APIs facilitate the creation of programs that can pull data from and update product catalogues. Customers benefit because they can research products before and while making purchases. eCommerce sites can quickly add, modify, and remove items from their catalogues, and can use the API to better organize their product information databases. When used by merchants, it allows them to better control and track their inventory.

Examples of free Product Detail APIs

The following are some examples of free product detail APIs:

- ASOS: This API enables users to manage their website’s content and add and update products and pages. It allows for further customization of their websites.

- Forever21 API: Forever21 information can be requested via the API. The interface returns JSON replies for categories, goods, searches, details, and lists. Authentication through API keys is required.

- HM – HennesMauritz API: Like the official H&M websites, this API allows you to search for data on regions, categories, goods, etc.

- Product Data: The Product Data API allows you to access product data, search for products, and manage your product catalogue. Product data includes anything about a product that can be seen, touched, or measured and organized in some way. While products have no one-size-fits-all data structure, you can use data extraction and dynamic definition tools to handle your inventory better.
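Where an API in the list above requires authentication through API keys, the key is commonly sent as a request header. The sketch below shows one way this could look; the header name `X-API-Key`, the environment variable `PRODUCT_API_KEY`, the URL, and the `authenticated_request` helper are all assumptions, since each provider documents its own scheme. The request is built but not sent.

```python
import os
from urllib.request import Request

# Hypothetical header name; check the provider's documentation for the real one.
API_KEY_HEADER = "X-API-Key"


def authenticated_request(url: str, api_key: str) -> Request:
    """Build (but do not send) a GET request that carries an API key header."""
    if not api_key:
        raise ValueError("an API key is required")
    return Request(url, headers={API_KEY_HEADER: api_key, "Accept": "application/json"})


# Read the key from the environment rather than hard-coding it in source.
api_key = os.environ.get("PRODUCT_API_KEY", "demo-key")
req = authenticated_request("https://api.example.com/products?category=shoes", api_key)
print(req.get_method(), req.full_url)
```

Keeping the key in an environment variable avoids committing it to version control alongside the code.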

Product Detail API Software Development Kits (SDKs)

Product Detail APIs can typically be used from the following programming languages, software development kits (SDKs), and tools:

- PHP
- Python
- Objective-C
- Node.js
- Java (Android)
- Ruby
- C# (.NET)
- cURL

Conclusion

In conclusion, the Product Detail API is a programmatic interface that allows developers to access detailed information about products stored in the product library. The retrieved data can then be used to create reports and generate quotes. It can help developers build better products and applications for their clients and users.

The Product Detail API helps you categorize and organize your products and suppliers securely and efficiently. Using this API, you can create shopping carts for eCommerce systems, giving you full control over all information about your products.

All developers should be aware of this useful set of APIs. It can improve how your clients’ and users’ products and reviews are displayed and how their inventory is stored within the platform. Product detail APIs are also suitable for merchants who want to provide enhanced features to their clients and increase their conversion rates. Developers and businesses across the eCommerce industry often use these APIs.